Even though Oracle enhances the restore or fault tolerance capabilities with every release there are still fatal errors which lead into a major outage of the database. Those errors are mainly produced by human beings – sorry guys, but that’s how it is.

In this blog I’ll show you how to restore the database to a specific SCN after a major incident. Actually, there is a true story behind this: Some years ago I had a customer whose production database was named “TEST”. Even though I told them to rename the database to somewhat meaningful the DBA was totally convinced that this is not a problem…

As time goes by he had a development database where he wanted to test a new version of the application so he dropped the user…

You probably know what happened?

He dropped the application user on the “TEST” database. The entire production line came to a full stop – no wonder- and the DBA became a little nervous. He was shocked and called me to help restore his database.

Find out when the Problem occured

My first question was: “When did you execute the drop” – silence. Actually I think he did not even know what day it was so his answer was: “about one hour ago – or so”.

Not a real help if you need to restore a database to a very specific point in time because even though the production stopped we didn’t want to lose too much data. So I was using Toad and the LogMiner wizard to find out when he executed the fatal script.

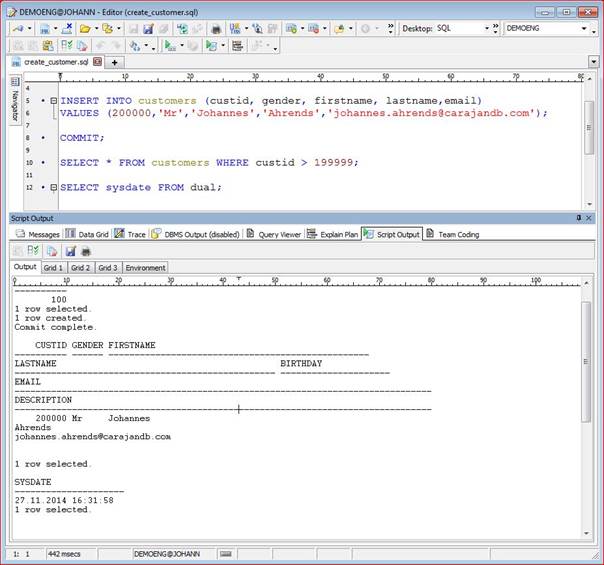

To give you an impression on how to look for the last DML and first DDL (drop) I inserted one row in the customer table and checked for that time:

Picture 1: Insert Customer Data

As you can see the time of the insert was at 16:31:58 on November 27th 2014. And how the fatal SQL occurs.

SQL> DROP USER demeng CASCADE; User dropped

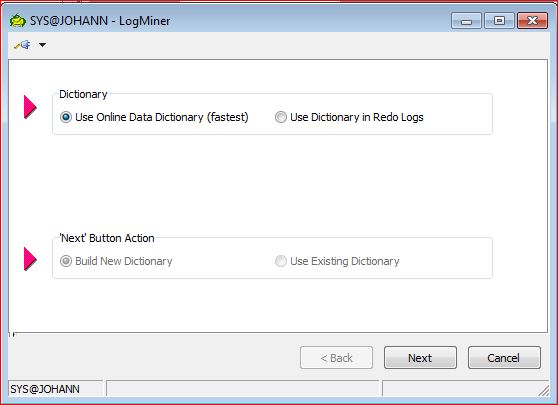

Now we can start the LogMiner and give it some estimations where to look for the data. Menu → Database → Diagnostic → LogMiner

Picture 2: Dictionary select in LogMiner

Even though I’m not on the server (the database JOHANN is running on a linux box) I’m able to directly use the Online Data Dictionary and check for the most recent redo log files.

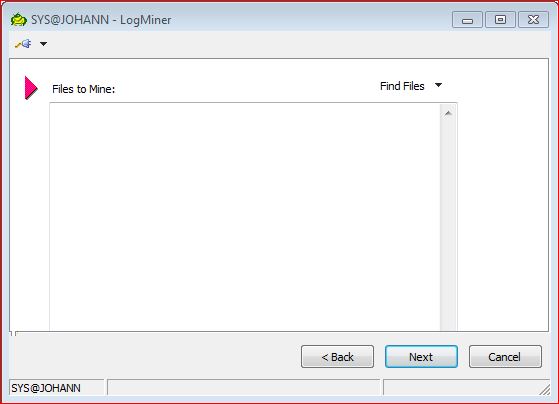

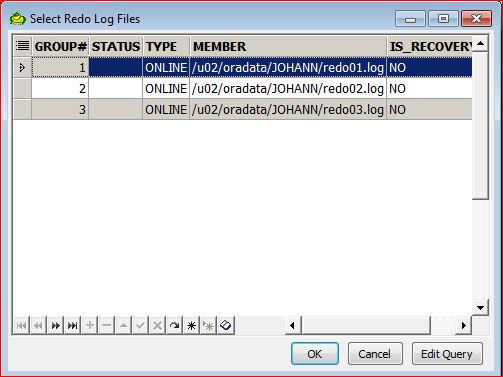

Picture 3: Find Files for LogMiner Session

Picture 4: Select online RedoLogs

At this point it doesn’t matter if you are using the online redologs or the archived ones as long as they are still available. That’s why it makes sense to keep the archived redologs on disk as long as you can.

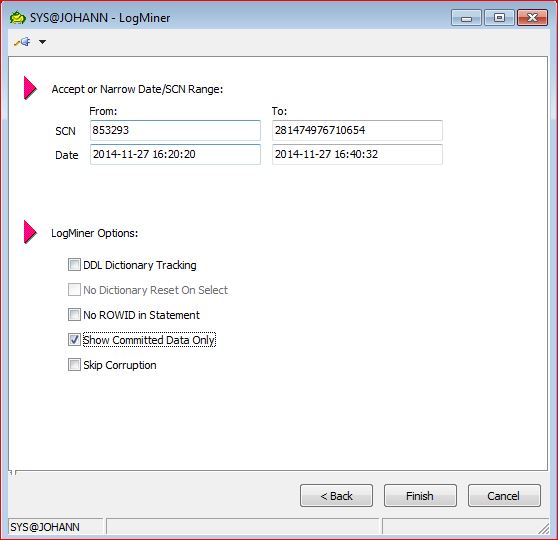

With the next step the content of the redologs is analyzed. Unfortunately the timestamp given back on the screen is wrong. The only value which matters is the SCN where the high number indicates that the SCN is invalid. So I’m reading until the end of the logfiles.

I changed the “From” and “To” Data fields to the approximately time window I’m looking for. And I eliminated uncommitted data.

Picture 5: Narrow Date for LogMiner Session

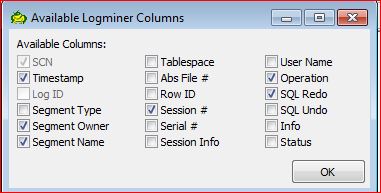

Before I press the green triangle to execute the LogMiner query I add some more columns to the list because they might help me to find the right SCN. Those columns are “Segment Owner”, “Segment Name” and “Operation”.

Picture 6: Select additional Columns

So let’s do the analysis:

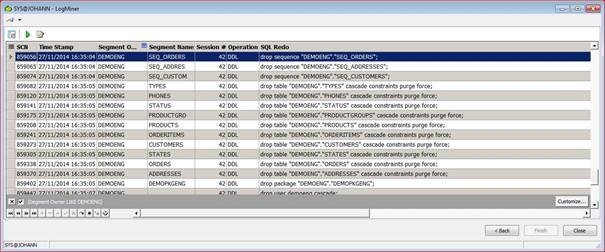

Picture 7: Query Operations of DEMOENG

Because I’ve dropped the schema “DEMOENG” I filter the output to only in that “Schema Owner”. I had expected to see the “INSERT INTO CUSTOMER …” statement first but actually only the DROP statements occurring right after the INSERT where shown. The reason is simple: Because that user had been dropped there is no longer a relationship between the tables and the owner (except for DDLs).

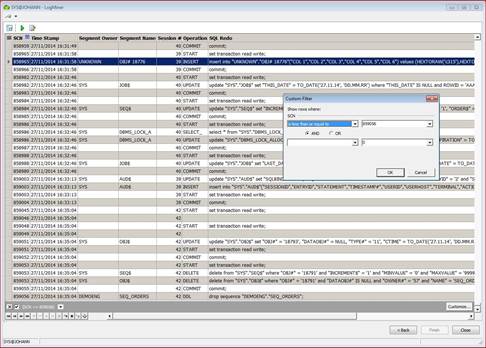

So a new filter on the SCN gave me an indication on the DML statements occurring right before that fatal DROP.

Picture 8: Get SCN before fatal Drop

As you can see and verify with the first screenshot the INSERT INTO CUSTOMER still exists (highlighted) but there is no longer any meaningful data as there are no corresponding columns or object names. But that doesn’t matter. Looking for the details I can see that I probably logged in before the fatal error at approximately 16:35:00. So I can recover until that time or SCN.

Restoring the Database

In a real life environment I would have opened the database in restricted mode to avoid any other application to continue to work and I would have taken a second backup just to ensure I’ve some more trials if miscalculating the SCN. In my example I’m now shutting down the instance and using RMAN to restore the database.

RMAN> SHUTDOWN IMMEDIATE RMAN> STARTUP MOUNT RMAN> RESTORE DATABASE UNTIL SCN 859045; RMAN> RECOVER DATABASE UNTIL SCN 859045; RMAN> ALTER DATABASE OPEN RESETLOGS;

The successful login to the schema “DEMOENG” will show that the recovery was successful. And a query for custid=200000 will list the row I inserted right before the DROP USER.

Conclusion

The LogMiner wizard is easy to use if you are able to read the content of V$LOGMNR_CONTENTS (that’s the view behind the result set). It doesn’t matter if you are using Oracle Standard Edition as in this case and have to restore the database using RMAN. If you are on Enterprise Edition you might want to use flashback database instead. But the behavior is similar. During my tests I realized the INSERT command for the single row I added did not show up in the logminer session. I had to enable supplemental logging first. So I would guess it does make sense to set supplemental logging even if you don’t use replication. In case of a fatal user error it will probably help identify the correct SCN.

No comments on “Restore Database after fatal User Error”